Investigating child sexual abuse material availability, searches, and users on the anonymous Tor network for a public health intervention strategy


Surge of CSAM hosted through the Tor network

RQ1: What is the distribution volume of CSAM hosted through the Tor network?

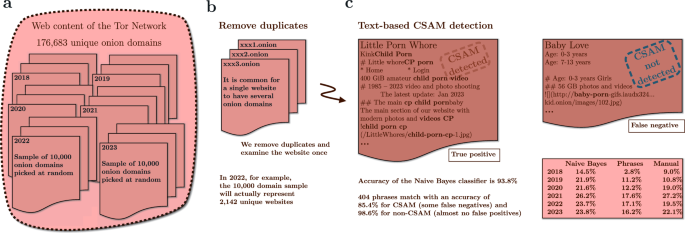

We investigate the years 2018–2023 and use a sample of 10,000 unique onion domains for each year. We then subject the text content to duplicate content filtering, fine-tuned phrase search, and supervised learning classifiers. This returns the detected CSAM percentage for each year, as shown in Fig. 2.

Figure 2

We measure the proportion of CSAM onion websites inside the Tor network in 2018–2023. (a) We use a sample of 10,000 unique onion domains for each year. (b) Many websites have several onion domains. We compare the title and sentences of the pages to detect duplicates, and keep a single domain if multiple domains share identical content. (c) We run text-based CSAM detection against the content of these distinct domains. Some CSAM websites do not use explicit sexual language, and text-based detection fails for them. Using three separate methods – manual validation, phrase matching, and the naive Bayes classifier – we find that the detected percentage of websites sharing CSAM is 16.2–23.8% in 2023. Comparing the automated methods to manual validation yields consistent results (22.1% in 2023 and 19.5% in 2022). For the manual validation, we randomly select the plain text representations of 1000 onion websites for each year, 2018–2023, and read the text content of these websites to determine whether they share CSAM and what English vocabulary this type of page uses.
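The duplicate filtering in step (b) can be sketched as follows. This is a minimal illustration, not the authors' implementation: the fingerprinting scheme (a SHA-256 hash over the normalised title and sentence set), the function names, and the example domains are all assumptions made for the sketch.

```python
import hashlib
import re


def content_fingerprint(title: str, text: str) -> str:
    """Hash the normalised title plus the sorted set of normalised sentences."""
    def norm(s: str) -> str:
        # Collapse whitespace and ignore case so trivial differences do not matter.
        return re.sub(r"\s+", " ", s).strip().lower()

    sentences = {norm(s) for s in re.split(r"[.!?]+", text) if norm(s)}
    payload = norm(title) + "\n" + "\n".join(sorted(sentences))
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def deduplicate(pages: dict) -> list:
    """Keep the first domain seen for each distinct content fingerprint."""
    seen = set()
    kept = []
    for domain, (title, text) in pages.items():
        fp = content_fingerprint(title, text)
        if fp not in seen:
            seen.add(fp)
            kept.append(domain)
    return kept


# Hypothetical example pages: the first two are mirrors of the same site.
pages = {
    "aaa.onion": ("Example Market", "Welcome to the market. Open daily."),
    "bbb.onion": ("example market", "Welcome to the  market.  Open daily."),
    "ccc.onion": ("Example Forum", "A discussion board."),
}
unique_domains = deduplicate(pages)
```

Here the two mirror domains collapse to one entry, so only distinct websites are counted in the later detection step.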

Phrase matching fails to detect anything that does not describe CSAM with the obvious phrases. In particular, there are real CSAM websites that do not use explicit sexual language and instead refer to content such as ‘baby love videos’; our text-based detection does not match these websites. In addition, there are link directory websites that provide descriptions of CSAM website links. Our text-based detection matches the description phrases even though this type of website does not share CSAM itself but merely links to websites that do.

We achieve 93.8% accuracy with a basic naive Bayes classifier (see Supplementary Methods A.2). Some legal adult pornography websites, like PornHub, provide an alternative onion domain accessible via Tor. We manually review a sample set of PornHub pages, and there is no indication of anything other than adult material; therefore, we include these in our training data to teach the classifier to differentiate between legal and illegal content. The classifier performs well and can distinguish between legitimate pornography websites and unlawful CSAM websites. This accuracy is as expected and consistent with previous research on Tor content classification (93.5%)20.
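To make the classification step concrete, here is a minimal sketch of a multinomial naive Bayes text classifier with add-one smoothing. It is not the authors' model (that is described in Supplementary Methods A.2): the class name, the whitespace tokenisation, the label names, and the placeholder training tokens (`term_a`, `term_b`, ...) are all assumptions chosen for illustration.

```python
import math
from collections import Counter, defaultdict


class NaiveBayesText:
    """Multinomial naive Bayes over whitespace tokens with add-one smoothing."""

    def fit(self, texts, labels):
        self.class_docs = Counter(labels)        # documents per class
        self.word_counts = defaultdict(Counter)  # token counts per class
        for text, label in zip(texts, labels):
            self.word_counts[label].update(text.lower().split())
        self.vocab = {w for c in self.word_counts.values() for w in c}
        return self

    def predict(self, text):
        total_docs = sum(self.class_docs.values())
        best_label, best_score = None, -math.inf
        for label, n_docs in self.class_docs.items():
            counts = self.word_counts[label]
            denom = sum(counts.values()) + len(self.vocab)
            score = math.log(n_docs / total_docs)  # log prior
            for word in text.lower().split():
                if word in self.vocab:  # ignore tokens never seen in training
                    score += math.log((counts[word] + 1) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Placeholder tokens stand in for real vocabulary; labels are hypothetical.
train_texts = [
    "term_a term_b gallery",
    "term_a term_c archive",
    "adult studio verified performers",
    "adult premium verified models",
]
train_labels = ["flagged", "flagged", "legal_adult", "legal_adult"]
model = NaiveBayesText().fit(train_texts, train_labels)

held_out = [("term_a archive", "flagged"), ("verified adult studio", "legal_adult")]
accuracy = sum(model.predict(t) == y for t, y in held_out) / len(held_out)
```

Including legal adult pages as a separate training class, as the text describes, is what lets the model separate the two kinds of content rather than flagging all sexual vocabulary.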

We anticipate that the phrase matching method will generate few false positives due to the explicit nature of the matching phrases. It is rare for a website to contain these phrases unless it also contains CSAM, and even those exceptions are describing linked CSAM websites. We anticipate – for the same reason – that this method will generate false negatives, as it requires exact CSAM-describing language. Indeed, the matching works accordingly: its accuracy is 85.4% with CSAM websites (some false negatives) and 98.6% with non-CSAM websites (almost no false positives).
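The two per-class figures above correspond to what is often called sensitivity (accuracy on CSAM websites) and specificity (accuracy on non-CSAM websites). The sketch below shows how they follow from confusion counts. The sample sizes of 1000 per class are hypothetical, chosen only so that the counts reproduce the reported 85.4% and 98.6%.

```python
def per_class_accuracy(tp: int, fn: int, tn: int, fp: int):
    """Return (sensitivity, specificity, overall accuracy) from confusion counts."""
    sensitivity = tp / (tp + fn)  # share of positive websites that are matched
    specificity = tn / (tn + fp)  # share of negative websites that are not matched
    overall = (tp + tn) / (tp + fn + tn + fp)
    return sensitivity, specificity, overall


# Hypothetical counts: 1000 websites per class.
sensitivity, specificity, overall = per_class_accuracy(tp=854, fn=146, tn=986, fp=14)
```

Note that the overall figure depends on the class mix of the evaluation sample, which is why the two per-class rates are the more informative numbers.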

When law enforcement has seized control of CSAM servers operating through the Tor network, they have documented terabytes of content with hundreds of thousands of users (see Supplementary Discussion C.2). In a comparable manner, we find indicators that suggest the distribution of extensive CSAM collections. While we read texts from the websites, we see numerous CSAM websites that claim to share gigabytes of media and thousands of videos and images.

Using three separate methods – manual validation, phrase matching, and the naive Bayes classifier – we conclude that around one-fifth of the unique websites hosted through the Tor network share CSAM. Previous research, in 2013, found that 17.6% of onion services shared CSAM, which corresponds with our findings1.

Examining CSAM user behaviour

RQ2: What is the CSAM search volume, and what exactly are users seeking?

11.1% of the search sessions seek CSAM

We examine search chains generated by users who enter consecutive queries. Following the searches entered by users and tracking queries per user, we study 110,133,715 search sessions in total and find that 32.5% (N = 35,751,619) include sexual phrases. Furthermore, 11.1% (N = 12,270,042) of the search sessions reveal that the user is explicitly searching for content related to the sexual abuse of children. Some of these CSAM search sessions include either ‘girl(s)’ (N = 393,261) or ‘boy(s)’ (N = 289,407); searching for girls is more prevalent, with a ratio of roughly 4:3. Seeking torture material is not typical: 0.5% of CSAM search sessions (N = 57,429) contain the terms ‘pain’, ‘hurt’, ‘torture’, ‘violence’, ‘violent’, ‘destruction’, or ‘destroy’.
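The percentages in this paragraph follow directly from the reported session counts. The short check below recomputes them; the variable names and the `pct` helper are introduced here for illustration only.

```python
total_sessions = 110_133_715
sexual_sessions = 35_751_619
csam_sessions = 12_270_042
girl_sessions = 393_261
boy_sessions = 289_407
torture_sessions = 57_429


def pct(part: int, whole: int) -> float:
    """Percentage of `whole` made up by `part`, rounded to one decimal place."""
    return round(100 * part / whole, 1)


sexual_share = pct(sexual_sessions, total_sessions)
csam_share = pct(csam_sessions, total_sessions)
torture_share = pct(torture_sessions, csam_sessions)  # relative to CSAM sessions
girl_boy_ratio = girl_sessions / boy_sessions         # about 1.36, close to 4:3
```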

During the COVID-19 pandemic and the first months of lockdowns, there was a significant surge in the user base of legal pornography websites across nations21. Surprisingly, we find no significant change in the behaviour of CSAM users between the periods before and after the COVID-19 pandemic measures (lockdowns, individuals spending more time at home); see more in Supplementary Methods A.8.

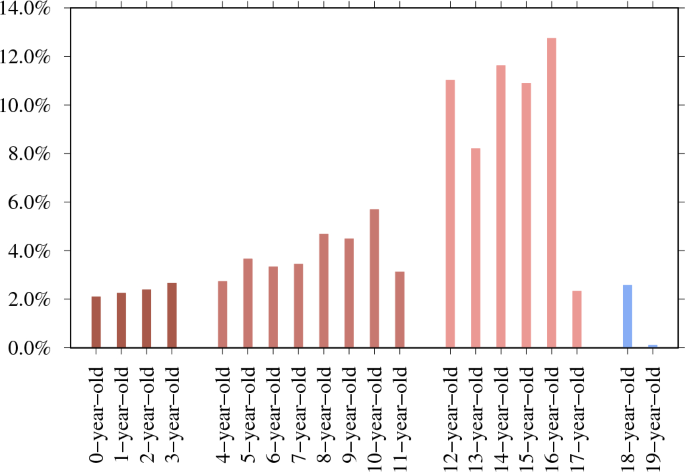

54.5% are searching for 12- to 16-year-olds

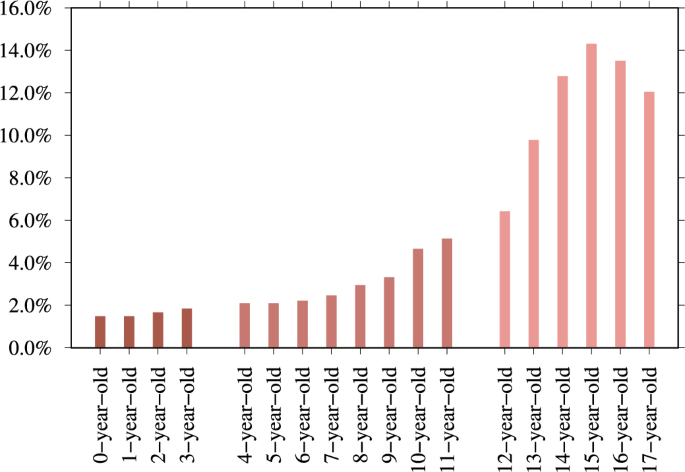

We determine if the search session reveals the exact age in which the CSAM user is interested. For example, for a 13-year-old, we count search sessions that include 13y(*), 13+y(*), 13teen, thirteen+year, 13boy(s) or 13girl(s). We use the same logic for other ages. In addition, to compare these searches for CSAM to legal adult sexual content searches, we include search sessions seeking 18-year-old (N = 12,347 from 110,133,715) and 19-year-old adults (N = 458 from 110,133,715). We find the age information in total for 479,555 search sessions. Figure 3 illustrates the age distribution of CSAM queries. This age distribution aligns with findings from previous studies22. An article titled ‘Pedophilia, Hebephilia, and the DSM-V’ finds qualitative differences between offenders who preferred pubertal and those with a prepubertal preference (a clinical trial of 881 men with problematic sexual behavior)23. The authors also note that the majority of child abuse victims are 14 years old. They concluded that the psychiatric diagnoses should be improved to include the following: sexually attracted to children younger than 11 (paedophilic type), sexually attracted to children aged 11–14 (hebephilic type), or sexually attracted to both (pedohebephilic type). Our data suggests that it may be more appropriate to observe the high percentage of individuals who have a sexual interest in 12-year-olds but not 11-year-olds. This finding is consistent with the national average age at menarche in the United States, which is 12.54 years24. Additionally, observe the decline in sexual interest that occurs across the ages of 17, 18, and 19, which indicates a distinct sexual interest in those aged 12 to 16 years old.

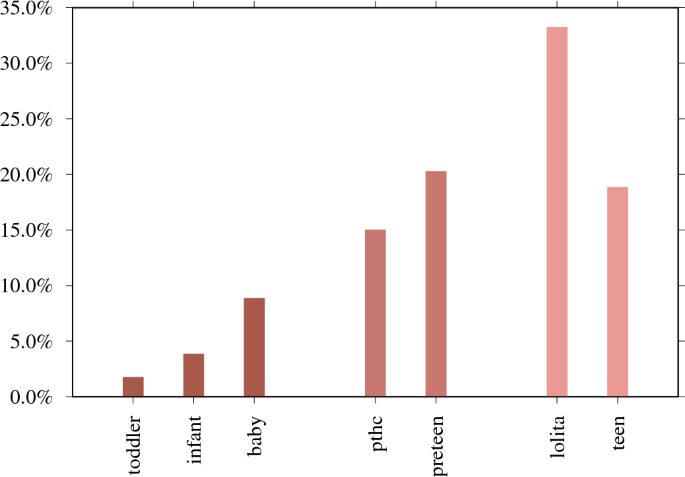

Moreover, in Fig. 4, we investigate CSAM search sessions containing age-indicating search terms and find that users are predominantly interested in 12- to 14-year-old teen content; for example, ‘lolita’ is the most popular term when compared to other age-related terms, with 33.2% (N = 746,786 of 2,287,057). In Vladimir Nabokov’s 1955 novel ‘Lolita’, a middle-aged male is sexually attracted to a 12-year-old girl and sexually abuses her. In the 1962 Stanley Kubrick film adaptation of the novel, ‘Lolita’ is 14 years old.

Figure 3

Ages between zero and 17 included in the CSAM search sessions and search sessions seeking 18-year-old and 19-year-old adults as a comparison (N = 479,555). 16-year-old (N = 61,083 of 479,555 – 12.7%) is the top-mentioned age. 54.5% of age-revealing searches (N = 261,162 of 479,555) target those aged 12–16 years old. Outside of this age range, the interest declines.

Figure 4

In the context of explicit CSAM search sessions, there are a total of 2,287,057 broad age-indicating searches with terms ‘toddler’, ‘infant’, ‘baby’, ‘pthc’ (preteen hardcore), ‘preteen’ (preadolescence, ages between nine and 12), ‘lolita’ (refers to a girl around 12–14 years old), and ‘teen’ (when included with CSAM terms).

How accessible is CSAM using Tor?

Based on result click statistics, we rank the 26 most visited Tor search engines on 17 March 2023. To determine whether CSAM is permitted in search results, we test the searches ‘child’, ‘sex’, ‘videos’, ‘love’, and ‘cute’, then study the search results. 21 out of 26 search engines provide CSAM results. Four search engines even promote and advocate CSAM, and one of them states that ‘child porn’ is the number one search phrase. Encouragingly, five Tor search engines attempt to block CSAM. Yet a user can use these search engines to locate other search engines and ultimately locate CSAM through the latter. Even if search engines block sites that directly share CSAM, it is still possible to find other entry points for onion sites that provide links to CSAM websites. From any major Tor entry point, whether a website, search engine, or link directory, a Tor user is only a few clicks away from CSAM content.

Self-help services are reaching users

When we study the searches, we discover that there are a few hundred queries from people who want to cease viewing CSAM and are concerned about their sexual interest in children, including queries ‘overcome child porn addiction’ and ‘how to stop watching child porn’ (see more in Supplementary Methods A.6).

When a person searches for CSAM, three prominent Tor search engines provide only links to self-help programmes for those who are concerned about their thoughts, feelings, or behaviours. Intervening in CSAM searches in this way directs individuals away from CSAM and towards help. Data from one of the self-help websites indicates that CSAM users actively visit the website, and those who start the self-help programme are very likely to continue following the programme (see more in Supplementary Methods A.6). In the next section, we show that when we present a survey to those who search for CSAM, many respond that they are motivated to stop using CSAM.

Intervention for CSAM users

RQ3: What does the survey reveal about CSAM users?

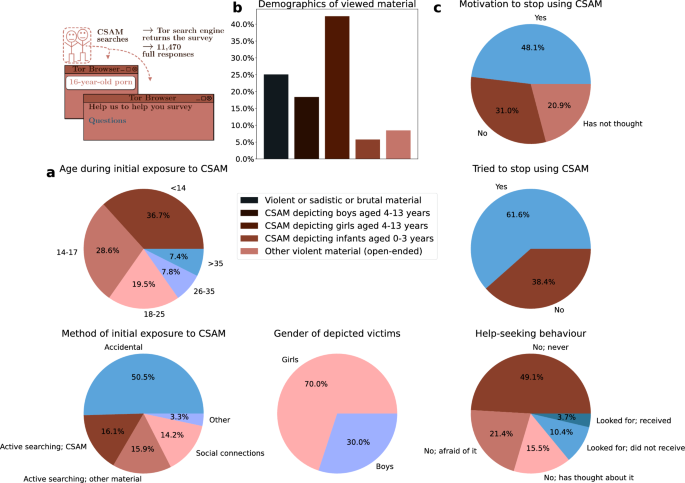

Figure 5

Our anonymous survey received responses from 11,470 individuals who sought CSAM on three popular Tor search engines. (a) The survey results indicate that 65.3% (N = 7199 of 11,030 who replied to the question) of CSAM users first saw the material when they were under 18 years old. 36.7% (N = 4048 of 11,030) first saw CSAM when they were 13 years old or younger. 50.5% (N = 4843 of 9599 who replied to the question) report that they first saw CSAM accidentally. (b) We asked the respondents what types of images and videos they view. Viewing CSAM depicting girls is more prevalent, with a ratio of 7:3. (c) The survey results indicate that 48.1% (N = 4120 of 8566 who replied to the question) of CSAM users are willing to change their behaviour to stop using CSAM, and 61.6% (N = 5200 of 8447 who replied to the question) have tried to stop using CSAM. However, only 14.0% (N = 985 of 7013 who replied to the question) of CSAM users have sought help to stop using CSAM, and an even smaller portion, 3.7% (N = 257), have actually received help. Notably, 21.4% (N = 1498) are afraid to seek help.

Individuals who seek CSAM on Tor search engines answered our survey that aims at developing a self-help programme for them. Figure 5 aggregates statistics from the responses.

Most CSAM users were first exposed to CSAM while they were children themselves, and half of the respondents (N = 4843 of 9599 who replied to the question) first saw the material accidentally, demonstrating the accessibility and availability of CSAM online. Exposure to sexually explicit material in childhood is associated, inter alia, with risky sexual behaviour in adulthood25, sexual harassment perpetration26, and the normalisation of violent sexual behaviour27, and has been defined as an adverse childhood experience and a form of noncontact sexual abuse28.

We ask for information regarding two age ranges in the survey: zero to three years and four to 13 years. We structured the question with the intention of focusing on pre-pubescent children, aged 0–13. Respondents were able to specify the age in the option ‘Other violent material, what?’ The majority (60.7%, N = 5342 of 8796 who replied to the question) of respondents say they view CSAM depicting girls or boys aged between four and 13 years, indicating a preference for images and videos depicting prepubescent and pubescent children. Of these respondents, 69.7% (N = 3725 of 5342) say they view girls, compared to 30.3% (N = 1617 of 5342) who view boys. There is also a small group (5.8%, N = 506) of CSAM users with a preference for CSAM depicting infants and toddlers aged between zero and three years old. Additionally, 25.1% (N = 2205) reported viewing images and videos related to violent or sadistic and brutal material.

8.4% (N = 743) of respondents say they view ‘other violent material’, and 458 provide explanatory open-ended responses. 61.6% (N = 282) of these responses explicitly mention the age of children depicted in the CSAM viewed, providing N = 1637 mentions of age, as Fig. 6 illustrates. Most responses refer to age brackets, for example ‘over 12 years old’, which we interpret as 12–17. The most common age is 15 years old (N = 234), followed by 16 years old (N = 221) and 14 years old (N = 209).

The survey data provides an age distribution that appears similar to, but is distinct from, that of the search data (see statistical tests in Supplementary Methods A.5). The survey responses give the age ranges that respondents say they are interested in, whereas the search sessions reveal the precise age that people are most interested in. 54.5% of age-revealing CSAM search sessions target sexual content aimed at 12- to 16-year-olds. The survey’s age distribution yields a very similar percentage: the range between 12 and 16 years old accounts for 56.8% of all mentioned ages (N = 929 of 1637).

Figure 6

282 of the open-ended responses explicitly mention the age of children depicted in the CSAM viewed, providing 1637 mentions of age.

Our analysis of 458 open-ended responses for ‘Other violent material, what?’ supports the prevalence of viewing material depicting girls. 33.8% (N = 155) of the 458 open-ended responses explicitly mention the gender of children. 91.6% (N = 142) of the responses that mention gender refer to girls, and 30.3% (N = 47) refer to boys. 21.9% (N = 34) of the responses mention both girls and boys. Considering the quantitative and qualitative data together (N = 5488), viewing CSAM depicting girls (N = 3839, 70.0%) is more prevalent than viewing CSAM depicting boys (N = 1649, 30.0%), with a ratio of 7:3.
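The gender percentages above sum to more than 100% because responses mentioning both girls and boys are counted in each group. The check below applies inclusion-exclusion to the reported counts and recomputes the combined 7:3 split; the variable names are introduced here for illustration.

```python
girls, boys, both = 142, 47, 34

# Inclusion-exclusion: responses mentioning at least one gender.
mention_gender = girls + boys - both

girls_pct = round(100 * girls / mention_gender, 1)
boys_pct = round(100 * boys / mention_gender, 1)
both_pct = round(100 * both / mention_gender, 1)

# Combined quantitative and qualitative counts reported in the text.
combined_girls, combined_boys = 3839, 1649
combined_total = combined_girls + combined_boys
combined_girls_pct = round(100 * combined_girls / combined_total, 1)
combined_boys_pct = round(100 * combined_boys / combined_total, 1)
```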

Overall, these findings are consistent with the latest Internet Watch Foundation’s Annual Report 2022 (see Supplementary Methods A.9).

CSAM users do want assistance

The Prevention Project Dunkelfeld offers clinical and support services to men who experience sexual attraction towards children and reaches these individuals with media campaigns29. Similarly, in collaboration with some legal adult pornography websites, including Pornhub, the Stop It Now! organisation alerts users who conduct CSAM searches of the illegality of their actions and directs them to help resources21.

Our results verify the feasibility of this type of intervention: a large proportion of CSAM users report that they want and have tried to change their behaviour to stop using CSAM. Almost half of the respondents report wanting to stop viewing CSAM monthly, weekly, or nearly every time (48.1%, N = 4120 of 8566 who replied to the question), and the majority of respondents report having tried to stop (61.6%, N = 5200 of 8447 who replied to the question). 31.0% (N = 2656) say that they do not want to stop, and 20.9% (N = 1790) say they have not thought about it.

Despite self-reported willingness and attempts to change behaviour, the fact that they are responding to the survey is evidence of their continued search for CSAM – albeit temporarily stepping away from CSAM to contemplate the concerns posed in the survey and provide a response. This raises the question of the commonalities between CSAM use and addiction. While addiction to the internet is not listed as a diagnostic disorder in the Diagnostic And Statistical Manual Of Mental Disorders, Fifth Edition, Text Revision (DSM-5-TR)5, there is extensive debate over whether problematic use of the internet – in particular in the context of legal pornography and CSAM use – can be considered an addiction. The International Classification of Diseases 11th Revision (ICD-11) includes compulsive sexual behaviour disorder, characterised by ‘persistent pattern of failure to control intense, repetitive sexual impulses or urges resulting in repetitive sexual behaviour’ – including repetitive use of legal pornography30.

Repetitive pornography use has similar effects to substance addiction31. Many people continually use CSAM and display addictive behaviours32, and the intensity of CSAM use often has properties that users call addictive33. In the search engine data, we notice that users who seek help often refer to their condition as ‘child porn addiction’. Understanding the commonalities between CSAM use and addiction is beneficial to prevention and treatment.

Help-seeking behaviour among CSAM users

Despite many respondents reporting that they would like to stop using CSAM, help-seeking behaviour among CSAM users remains low. Only 14.0% (N = 985 of 7013 who responded to the question) of CSAM users have sought help. Many report that they feel afraid to seek help (21.4%, N = 1498 of 7013), and the majority (64.6%, N = 4530 of 7013) report that they have not sought help.

Of those who have actively sought help to change their illicit behaviour, 73.9% (N = 728 of 985) have not been able to get help. This population has an unmet demand for effective intervention resources34. This may be due to a lack of awareness of the resources available35 or because the available resources are not desirable. Recent studies34,35,36 found the following barriers to seeking and receiving psychological services for child sexual offenders and people concerned about their sexual interest in children: fear of legal consequences; fear of stigmatisation; shame; affordability; and a perceived or actual lack of understanding by professionals.

The unmet demand for help resources demonstrates the urgent need for investment and further implementation of perpetration prevention programmes in order to effectively reach those who require intervention37.

Through bivariate analysis, we examine the associations between a number of covariates and the outcome of help-seeking in the survey data. We find the following to be determinants of help-seeking: duration and frequency of CSAM use; depression, anxiety, self-harming thoughts, guilt, and shame. We have only included the respondents with non-missing answers; for all results on determinants of help-seeking, see Supplementary Methods A.4 and Supplementary Tables.
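As an illustration of what a bivariate association test looks like, the sketch below computes a Pearson chi-square statistic for a 2×2 table of one covariate against help-seeking. This section does not state which test the authors use (the details are in Supplementary Methods A.4), so the choice of test is an assumption, and the counts are hypothetical rather than taken from the survey.

```python
def chi_square_2x2(a: int, b: int, c: int, d: int) -> float:
    """Pearson chi-square statistic (no continuity correction) for [[a, b], [c, d]]."""
    n = a + b + c + d
    row1, row2 = a + b, c + d
    col1, col2 = a + c, b + d
    observed = [a, b, c, d]
    # Expected counts under independence of rows and columns.
    expected = [row1 * col1 / n, row1 * col2 / n, row2 * col1 / n, row2 * col2 / n]
    return sum((o - e) ** 2 / e for o, e in zip(observed, expected))


# Hypothetical counts: rows = frequent / infrequent users,
# columns = sought help / did not seek help.
statistic = chi_square_2x2(300, 700, 200, 800)

# Critical value of the chi-square distribution with 1 degree of freedom at alpha = 0.05.
CRITICAL_VALUE_DF1 = 3.841
is_associated = statistic > CRITICAL_VALUE_DF1
```

With these made-up counts the statistic is well above the critical value, which is the pattern one would expect for a covariate reported as a determinant of help-seeking.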

Individuals who have used CSAM for a longer duration and those who use it more frequently are more likely to actively seek assistance to change their behaviour. There is an opportunity to intervene with those who have been viewing CSAM for a shorter duration in order to increase help-seeking at an earlier stage. Those who use CSAM more frequently may be more likely to seek help due to the detrimental impact that frequent use of CSAM may have on an individual’s daily life, including impaired social and occupational functioning and deep distress, which may be a strong motivator to seek help to reduce use of CSAM in order to improve life situations. Common reasons for seeking help for substance abuse include habitual use, taking a substance for a long time, and a need to take it daily38. Such driving factors for help-seeking appear to be similar in this sample of CSAM users. Respondents who face more difficulties in carrying out ordinary daily routines and activities are more likely to have sought help to stop using CSAM. Those who experience such difficulties daily have one of the highest rates of help-seeking.

RQ4: How can search engine-based intervention reduce child abuse?

We demonstrate that CSAM websites are not only widely hosted through the Tor network but also actively sought. At the same time, individuals voluntarily participate in the search engine-prompted survey instead of viewing CSAM. In this sense, even the survey acts as an intervention that reduces CSAM usage.

We propose an intervention strategy based on our observation that some CSAM users do indeed recognise their problem. Even when CSAM users are seeking CSAM, they are willingly visiting self-help pages and continuing to study cognitive behavioural therapy information. Search engines, which are the main way people find onion sites, should start filtering CSAM and diverting people towards help to stop seeking CSAM. This is technically possible because of the accurate, text-based detection of CSAM that we demonstrate, and furthermore, the CSAM phrase detection list can be shared between search engines.
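The proposed search engine behaviour can be sketched as a thin layer in front of the normal search backend. This is an illustrative outline under stated assumptions, not a description of any deployed system: `BLOCKED_PHRASES` uses placeholder strings in place of a shared detection list, `HELP_LINKS` is a hypothetical URL, and `handle_query`, `SearchResponse`, and `search_backend` are names invented for the sketch.

```python
from dataclasses import dataclass

# Placeholders only; a real deployment would load the shared phrase detection list.
BLOCKED_PHRASES = frozenset({"<blocked phrase 1>", "<blocked phrase 2>"})
HELP_LINKS = ("https://self-help.example/start",)  # hypothetical self-help URL


@dataclass(frozen=True)
class SearchResponse:
    intervened: bool
    links: tuple


def handle_query(query: str, search_backend) -> SearchResponse:
    """Return only help links for matching queries; otherwise pass the query through."""
    normalised = " ".join(query.lower().split())
    if any(phrase in normalised for phrase in BLOCKED_PHRASES):
        return SearchResponse(intervened=True, links=HELP_LINKS)
    return SearchResponse(intervened=False, links=tuple(search_backend(normalised)))


def fake_backend(q: str) -> list:
    """Stand-in for a real search index, used only for this example."""
    return [f"result for {q}"]


blocked = handle_query("  <Blocked Phrase 1> videos", fake_backend)
allowed = handle_query("privacy tools", fake_backend)
```

Keeping the phrase list as data separate from the matching logic is what would allow the same list to be shared between search engines, as the text suggests.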

Source : https://www.nature.com/articles/s41598-024-58346-7

Author :

Date : 2024-04-03 07:00:00
